For my birthday a while back, a friend of mine gave me food coloring, a box of borax and spools of thread. I asked what they were for, and she said crystals.

Fig. 1: Borax crystal growing birthday gift (orig.)

Of course! I hadn’t ever seen people grow borax crystals before, only sugar or salt, so I looked up a tutorial on YouTube. The procedure was pretty standard: heat water up to near boiling, dissolve the borax, insert a pipe cleaner or thread and wait overnight for the solution to cool down and precipitate out crystals. But while the procedure is simple, the science behind nucleation is more complicated and pretty interesting.


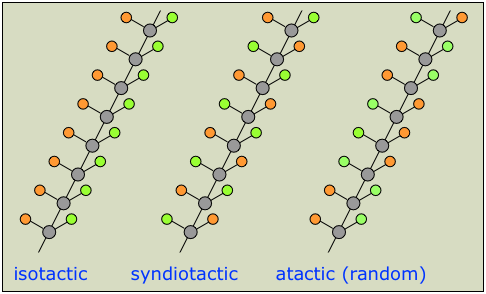

In order for a crystal to form in solution, molecules of a substance must conglomerate to an adequate size to encourage the spontaneous coordination of more molecules. A cluster of this adequate size is called a "nucleus," and the process of its formation is called "nucleation." Clusters that are not large enough to be nuclei are called "embryos." The nucleation process can be described in terms of Gibbs free energy, which accounts for both enthalpy, related to the nucleus’ internal energy, and entropy. Gibbs free energy is given by the equation

1. $\Delta G = \Delta H - T\Delta S$ (H is enthalpy, T is temperature in kelvins and S is entropy)

For a crystal born homogeneously (meaning suspended in a medium without contact with other surfaces), there are two main energy changes occurring. The first is a lowering of molecular energy due to the formation of attractions between coordinating substance molecules, for reasons described in Why Cold Drinks "Sweat". This change in energy can be described by the equation

2. $\Delta G = V\Delta G_v$ (V is volume, $\Delta G_v$ is the change in free energy per unit volume)

To find $\Delta G_v$, we will assume that the crystal-solution system is cooled to slightly below the substance's melting point, $T_m$. At this small undercooling, $\Delta H$ and $\Delta S$ can be approximated as temperature independent [1]. But first, at $T_m$ the difference in free energy $\Delta G$ between a substance’s solid and liquid forms is 0. Therefore,

3. $\Delta G_m = \Delta H - T_m\Delta S_m = 0$

4. $\Delta S_m = \Delta H/T_m$
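To see equations 1, 3 and 4 at work with real numbers, here is a minimal Python sketch that uses water freezing as a stand-in (my choice for illustration, not part of the borax setup). The enthalpy of fusion is the commonly quoted value of about 6.01 kJ/mol, and the function and variable names are made up for this example.

```python
# Sketch of equations 1, 3 and 4 for water freezing (an illustrative stand-in,
# not borax). All names here are hypothetical helpers for this post.

T_MELT = 273.15      # K, melting point of ice at 1 atm
DELTA_H = -6010.0    # J/mol, enthalpy change on freezing (heat is released)

# Equation 4: at the melting point, dS = dH / Tm
DELTA_S = DELTA_H / T_MELT   # J/(mol K), negative because the solid is more ordered


def delta_g(temperature):
    """Equation 1, with dH and dS treated as temperature independent."""
    return DELTA_H - temperature * DELTA_S


for t in (283.15, 273.15, 263.15):
    print(f"T = {t:.2f} K: dG = {delta_g(t):+.1f} J/mol")
```

The sign of the result is the part to watch: it should come out positive above the melting point (freezing is not favorable), essentially zero at the melting point, and negative below it.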

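Now, assuming that $\Delta H$ and $\Delta S$ are temperature independent, we can use the same expression for entropy as used at $T_m$ to find the value of $\Delta G_v$. While we’re at it, let’s assume the crystal nucleus is spherical for simplicity’s sake. Writing $\Delta H_{fv}$ for the enthalpy of fusion per unit volume and $\Delta T = T_m - T$ for the undercooling, the following are therefore true:

5. $\Delta G_v = \Delta H_{fv} - T\Delta S = \Delta H_{fv} - T(\Delta H_{fv}/T_m) = \Delta H_{fv}(T_m - T)/T_m = \Delta H_{fv}\Delta T/T_m$ (from equations 1 and 4)

6. $\Delta G = V\Delta G_v = \frac{4}{3}\pi r^3(\Delta H_{fv}\Delta T/T_m)$ (from equations 2 and 5, $V_{sphere} = \frac{4}{3}\pi r^3$)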
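Equations 5 and 6 are easy to turn into a couple of small functions. The sketch below is only an illustration: the input numbers are assumed values of the size you might see for a metal, not measurements for borax, and the function names are my own.

```python
import math


def delta_g_v(delta_h_fv, t_melt, t):
    """Equation 5: free energy change per unit volume, in J/m^3."""
    return delta_h_fv * (t_melt - t) / t_melt


def bulk_term(r, dg_v):
    """Equation 6: free energy change for a sphere of radius r, in J."""
    return (4.0 / 3.0) * math.pi * r**3 * dg_v


# Assumed, illustrative inputs (NOT borax data)
DELTA_H_FV = -1.16e9   # J/m^3, negative because crystallization releases heat
T_MELT = 1337.0        # K
T = 1107.0             # K, i.e. 230 K of undercooling

dg_v = delta_g_v(DELTA_H_FV, T_MELT, T)
print(f"dG_v = {dg_v:.3e} J/m^3")
print(f"bulk term at r = 1 nm: {bulk_term(1e-9, dg_v):.3e} J")
```

Both numbers come out negative, and the driving force vanishes when `t` equals `t_melt`, which matches equation 3.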

The second energy change occurring is an increase in a newborn crystal’s energy because the surface of the crystal is disrupting bonding in the liquid medium around it. This change can be described as

7. $\Delta G = A\gamma_s = 4\pi r^2\gamma_s$ (A is the nucleus’ surface area, $\gamma_s$ is the surface free energy value characteristic of the medium-solid interface)

This expression is just the disruption free energy per unit area multiplied by the surface area of a sphere. Together, these two energy changes dictate nucleation. Putting the two expressions together, we get

8. $\Delta G_{hom} = V\Delta G_v + A\gamma_s = \frac{4}{3}\pi r^3\Delta H_{fv}\Delta T/T_m + 4\pi r^2\gamma_s$ ($\Delta G_{hom}$ indicates that this equation is for homogeneous nucleation)
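To get a feel for how the two terms in equation 8 compete, here is a short sketch that evaluates them at a few radii. The values of `DG_V` and `GAMMA_S` are assumed for illustration only (they are not borax data), and the function name is hypothetical.

```python
import math


def delta_g_hom(r, dg_v, gamma_s):
    """Equation 8: volume term plus surface term for a spherical cluster, in J."""
    volume_term = (4.0 / 3.0) * math.pi * r**3 * dg_v   # negative, grows as r^3
    surface_term = 4.0 * math.pi * r**2 * gamma_s       # positive, grows as r^2
    return volume_term + surface_term


# Assumed, illustrative inputs (NOT borax data)
DG_V = -2.0e8      # J/m^3
GAMMA_S = 0.132    # J/m^2

for r_nm in (0.5, 1.0, 1.5, 2.0, 2.5):
    r = r_nm * 1e-9
    print(f"r = {r_nm:.1f} nm: dG_hom = {delta_g_hom(r, DG_V, GAMMA_S):+.2e} J")
```

For small clusters the surface term wins and the total is positive; for large ones the volume term takes over and the total goes negative.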

This equation is very useful and can be used to describe nucleation events from borax crystals precipitating to homogeneous cloud formation. To tease a little more information out of equation 8, let’s see how $\Delta G_{hom}$ changes in response to a substance clump slowly growing in radius. This rate of change corresponds to the derivative of $\Delta G_{hom}$ with respect to radius r [2]:

9. $d(\Delta G_{hom})/dr = 4\pi r^2\Delta G_v + 8\pi r\gamma_s$

The point where $\Delta G_{hom}$ no longer changes with r corresponds to the peak on the following graph of equation 8 and its component parts, equations 2 and 7.

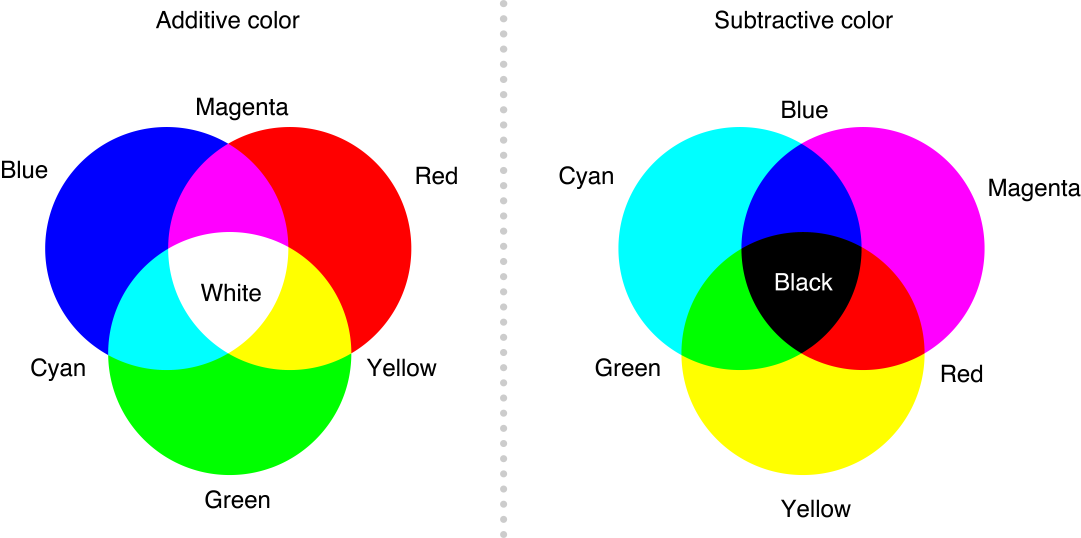

Fig. 2: Free energy of nucleation as radius increases (Materials Science and Engineering, an Introduction)


Equation 2 is shown as a decreasing curve because $\Delta H_f$ is negative for crystallization. This makes sense because as a material crystallizes, energy is lost to its surroundings, so heat must flow out of the system. To find the critical nucleation radius, we set the derivative to zero:

10. $d(\Delta G_{hom})/dr = 4\pi r^2\Delta G_v + 8\pi r\gamma_s = 0$ (from equation 9)

11. $r^* = -2\gamma_s/\Delta G_v$ (a positive number, because $\Delta G_v$ is negative)
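As a sanity check on the critical radius, the sketch below computes it and confirms that the derivative from equation 9 really is zero there. The inputs are assumed, illustrative values (not borax data) and the function names are my own.

```python
import math


def critical_radius(dg_v, gamma_s):
    """Radius at which d(dG_hom)/dr = 0; dg_v must be negative."""
    return -2.0 * gamma_s / dg_v


def d_delta_g_hom_dr(r, dg_v, gamma_s):
    """Equation 9: slope of the free energy curve at radius r."""
    return 4.0 * math.pi * r**2 * dg_v + 8.0 * math.pi * r * gamma_s


# Assumed, illustrative inputs (NOT borax data)
DG_V = -2.0e8      # J/m^3
GAMMA_S = 0.132    # J/m^2

r_star = critical_radius(DG_V, GAMMA_S)
print(f"r* = {r_star * 1e9:.2f} nm")
print(f"slope just below r*: {d_delta_g_hom_dr(0.9 * r_star, DG_V, GAMMA_S):+.2e} J/m")
print(f"slope just above r*: {d_delta_g_hom_dr(1.1 * r_star, DG_V, GAMMA_S):+.2e} J/m")
```

The slope is positive below the critical radius and negative above it, so embryos tend to shrink back while nuclei tend to keep growing.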

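Plugging the critical radius into equation 8 and extracting the negative from $\Delta H_f$ to avoid confusion (so that $|\Delta G_v|$ below is the magnitude of $\Delta G_v$), we get

12. $\Delta G^*_{hom} = -\frac{4}{3}\pi(2\gamma_s/|\Delta G_v|)^3|\Delta G_v| + 4\pi(2\gamma_s/|\Delta G_v|)^2\gamma_s = \frac{16}{3}\pi\gamma_s^3/\Delta G_v^2$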

This expression describes the magnitude of the energy change needed to get a molecular cluster up to the size of the critical radius r*, a sort of activation energy [3].
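One thing equations 5 and 12 imply together is that the barrier depends strongly on undercooling: the critical radius scales as 1/ΔT and the barrier as 1/ΔT². Here is a small sketch that shows this with assumed, illustrative inputs (not borax data); the function names are hypothetical.

```python
import math


def critical_radius(dg_v, gamma_s):
    """Critical radius from setting equation 9 to zero; dg_v is negative."""
    return -2.0 * gamma_s / dg_v


def barrier(dg_v, gamma_s):
    """Equation 12: free energy barrier at the critical radius, in J."""
    return 16.0 * math.pi * gamma_s**3 / (3.0 * dg_v**2)


def delta_g_hom(r, dg_v, gamma_s):
    """Equation 8, used here only to cross-check the barrier."""
    return (4.0 / 3.0) * math.pi * r**3 * dg_v + 4.0 * math.pi * r**2 * gamma_s


# Assumed, illustrative inputs (NOT borax data)
DELTA_H_FV = -1.16e9   # J/m^3
T_MELT = 1337.0        # K
GAMMA_S = 0.132        # J/m^2

for undercooling in (115.0, 230.0):
    dg_v = DELTA_H_FV * undercooling / T_MELT          # equation 5
    r_star = critical_radius(dg_v, GAMMA_S)
    print(f"dT = {undercooling:.0f} K: r* = {r_star * 1e9:.2f} nm, "
          f"barrier = {barrier(dg_v, GAMMA_S):.2e} J")
```

Doubling the undercooling halves the critical radius and cuts the barrier to a quarter, which is one way to see why a solution that cools further is more likely to nucleate.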

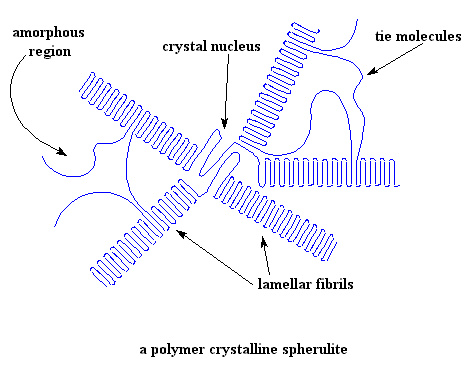

However, homogeneous nucleation is relatively difficult compared to heterogeneous nucleation, as I’m sure you’ve probably heard. Heterogeneous nucleation occurs when a nucleus forms on a surface, such as a dust particle for clouds or thread for nucleating borax crystals. The heterogeneous nucleation equations are harder to derive because of more complex volume and surface area terms as well as extra surface energy considerations, so I will not be posting these calculations. They can be found in reference 3, but the summarized result is that the critical radius in heterogeneous nucleation remains the same as with homogeneous nucleation, while the nucleation free energy is lower, which makes the nucleation process more accessible. This is why minimizing dust or rough surfaces is important for growing larger crystals rather than clusters of small ones.
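For the curious, the result usually quoted in textbooks for a cap-shaped nucleus sitting on a flat surface is that the homogeneous barrier gets multiplied by a shape factor that depends on the contact (wetting) angle θ between nucleus and surface. The angle θ and the function below are things I am introducing here as an assumption for illustration; I have not reproduced the derivation.

```python
import math


def shape_factor(theta_deg):
    """Textbook spherical-cap factor S(theta) = (2 + cos t)(1 - cos t)^2 / 4.

    theta_deg is the contact angle in degrees (an assumed model parameter).
    """
    c = math.cos(math.radians(theta_deg))
    return (2.0 + c) * (1.0 - c) ** 2 / 4.0


def barrier_hom(dg_v, gamma_s):
    """Equation 12: homogeneous nucleation barrier, in J."""
    return 16.0 * math.pi * gamma_s**3 / (3.0 * dg_v**2)


# Assumed, illustrative inputs (NOT borax data)
DG_V = -2.0e8      # J/m^3
GAMMA_S = 0.132    # J/m^2

for theta in (30.0, 90.0, 180.0):
    s = shape_factor(theta)
    print(f"theta = {theta:5.1f} deg: S = {s:.3f}, "
          f"barrier = {s * barrier_hom(DG_V, GAMMA_S):.2e} J")
```

In this model the factor runs from 0 (the surface is wetted completely) up to 1 at 180 degrees (the surface does not help at all), so a thread that the crystal "likes" lowers the barrier without changing the critical radius.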

What has been described above is just a small bit of the complexity of crystal growing. Knowledge of nucleation rates and crystal growth is widely used in processes such as tuning metals to have properties fit for specific purposes or growing single-crystal silicon for computer processors, and I’m sure this information will come up again in later posts. But mild difficulty of math aside, it’s cool stuff, huh? As a treat for reading through this post, watch this fun Minute Earth video on the homogeneous nucleation of clouds and see if you recognize some of the concepts we discussed.

I’m planning on growing some borax crystals soon, and when I do I’ll likely write an experiment post about it, so be sure to come back and check that out. I’ve already had my first few days of classes and so far it seems that I will be able to continue posting once a week, likely on Sunday or Monday.

As always, thanks for reading and I’ll be posting new stuff soon!